Microsoft Azure AppFabric Caching Service Explained!

April 14, 2011

MIX always comes with a mix of feelings: excitement at the prospect of trying out the new releases, and the heartache of trying to understand the new technologies “in depth”. And so starts the “googling... oops, binging” through blogs, videos and so on. What does it mean? How does it impact me?

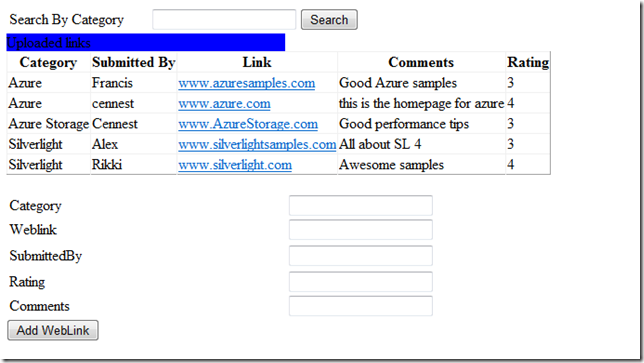

One very important release at MIX 2011 is the AppFabric Caching service. At Cennest we do a lot of Azure development and migration work, and this feature caught our immediate attention as something that will have a high impact on the architecture, cost and performance of new applications and migrations.

So we collated information from various sources (references below), and here is an attempt to simplify the explanation for you!

What is caching?

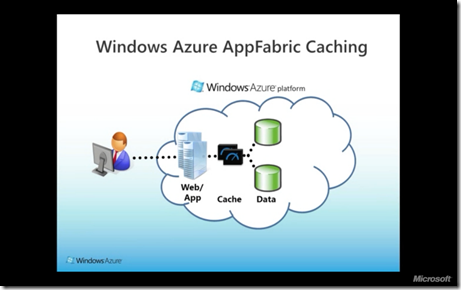

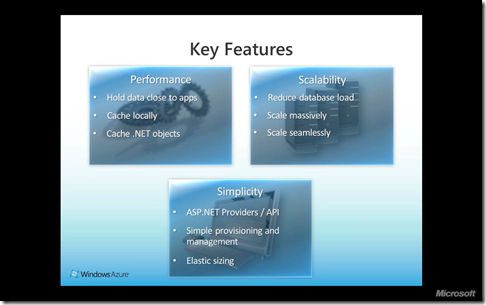

The Caching service is a distributed, in-memory application cache service that accelerates the performance of Windows Azure and SQL Azure applications by letting you keep data in memory, saving you the need to retrieve that data from storage or a database. (Implicit cost benefit? Well, that depends on the pricing of the Caching service, which is yet to be released.)

Basically, it’s a layer that sits between the database and the application. You can use it to store data and avoid frequent trips to the database, thereby reducing latency and improving performance.

How does this work?

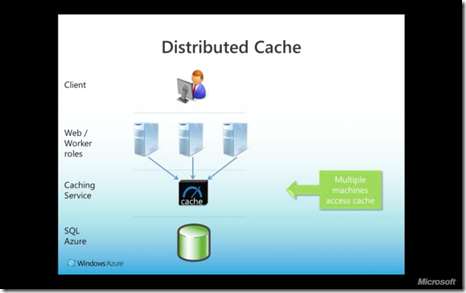

Think of the Caching service as Microsoft running a large set of cache clusters for you, heavily optimized for performance, uptime, resiliency and scale-out, and exposed as a simple network service with an endpoint for you to call. The Caching service is a highly available multitenant service with no management overhead for its users.

As a user, what you get is a secure Windows Communication Foundation (WCF) endpoint to talk to, the amount of usable memory you need for your application, and APIs for the cache client to call to store and retrieve data.

The Caching service does the job of pooling memory from the distributed cluster of machines it runs and manages to provide the amount of usable memory you need. As a result, it also automatically provides the flexibility to scale up or down based on your cache needs with a simple change in the configuration.

Are there any variations in the types of caches available?

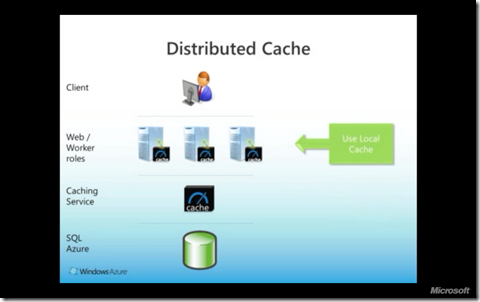

Yes, apart from using the cache on the Caching service there is also the ability to cache a subset of the data that resides in the distributed cache servers, directly on the client—the Web server running your website. This feature is popularly referred to as the local cache, and it’s enabled with a simple configuration setting that allows you to specify the number of objects you wish to store and the timeout settings to invalidate the cache.
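To make the local cache idea concrete, here is a minimal Python sketch. It is not the AppFabric client API (which is .NET and configuration driven); `LocalCache`, `max_objects` and `ttl_seconds` are hypothetical names standing in for the object-count and timeout settings described above, and a plain dict stands in for the distributed cache.

```python
import time


class LocalCache:
    """Hypothetical client-side cache sitting in front of a remote cache."""

    def __init__(self, remote, max_objects=2, ttl_seconds=30.0, clock=time.monotonic):
        self.remote = remote            # stand-in for the distributed cache (a dict here)
        self.max_objects = max_objects  # number of objects to keep locally
        self.ttl_seconds = ttl_seconds  # timeout after which a local copy is invalidated
        self.clock = clock
        self._local = {}                # key -> (value, time stored)
        self.remote_reads = 0           # counts trips to the remote cache

    def get(self, key):
        entry = self._local.get(key)
        if entry is not None:
            value, stored_at = entry
            if self.clock() - stored_at < self.ttl_seconds:
                return value            # served locally, no network trip
            del self._local[key]        # local copy timed out
        self.remote_reads += 1
        value = self.remote.get(key)
        if value is not None:
            if len(self._local) >= self.max_objects:
                # Drop the oldest local entry to respect the object limit.
                oldest = min(self._local, key=lambda k: self._local[k][1])
                del self._local[oldest]
            self._local[key] = (value, self.clock())
        return value
```

Repeated reads of the same key within the timeout never leave the web server, which is where the latency saving comes from. The trade-off is that a local copy can be stale until its timeout passes.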

What can I cache?

You can pretty much keep any object in the cache: text, data, blobs, CLR objects and so on. There’s no restriction on the size of the object, either. Hence, whether you’re storing explicit objects in cache or storing session state, the object size is not a consideration to choose whether you can use the Caching service in your application.

However, the cache is not a database! A SQL database is optimized for a different set of patterns than the cache tier is designed for. In most cases, both are needed and can be paired to provide the best performance and access patterns while keeping the costs low.

How can I use it?

- For explicit programming against the cache APIs, include the cache client assembly in your application from the SDK and you can start making GET/PUT calls to store and retrieve data from the cache.

- For higher-level scenarios that in turn use the cache, you need to include the ASP.NET session state provider for the Caching service and interact with the session state APIs instead of interacting with the caching APIs. The session state provider does the heavy lifting of calling the appropriate caching APIs to maintain the session state in the cache tier. This is a good way for you to store information like user preferences, shopping cart, game-browsing history and so on in the session state without writing a single line of cache code.

When should I use it?

A common problem that application developers and architects have to deal with is the lack of guarantee that a client will always be routed to the same server that served the previous request.

When these sessions can’t be sticky, you’ll need to decide what to store in session state and how to bounce requests between servers to work around the lack of sticky sessions. The cache offers a compelling alternative to storing any shared state across multiple compute nodes. (These nodes would be Web servers in this example, but the same issues apply to any shared compute tier scenario.) The shared state is consistently maintained automatically by the cache tier for access by all clients, and at the same time there’s no overhead or latency of having to write it to a disk (database or files).
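The difference between per-server state and state held in a shared cache tier can be shown with a small Python sketch. `WebServer` and `add_to_cart` are hypothetical names for illustration only, and a dict stands in for the cache tier. This is not the ASP.NET session state provider itself.

```python
class WebServer:
    """Hypothetical web server that keeps session data in a given store."""

    def __init__(self, name, session_store):
        self.name = name
        self.sessions = session_store  # shared cache tier, or this server's own memory

    def add_to_cart(self, session_id, item):
        # Read the current session state, update it, and write it back.
        cart = self.sessions.get(session_id, [])
        cart = cart + [item]
        self.sessions[session_id] = cart
        return cart


# Shared cache tier: both servers see the same session state.
shared_cache = {}
server_a = WebServer("A", shared_cache)
server_b = WebServer("B", shared_cache)
server_a.add_to_cart("session-1", "book")
cart_shared = server_b.add_to_cart("session-1", "pen")

# Per-server memory: the second server never sees the first item.
server_c = WebServer("C", {})
server_d = WebServer("D", {})
server_c.add_to_cart("session-1", "book")
cart_isolated = server_d.add_to_cart("session-1", "pen")
```

With the shared store the second request sees both items regardless of which server handles it. With per-server memory the first item is invisible to the second server.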

How long does the cache store content?

Both the Azure and the Windows Server AppFabric Caching services use two techniques to remove data from the cache automatically: expiration and eviction. A cache has a default timeout associated with it, after which an item expires and is removed automatically from the cache.

This default timeout may be overridden when items are added to the cache. The local cache similarly has an expiration timeout.

Eviction refers to the process of removing items because the cache is running out of memory. A least recently used (LRU) algorithm is used to remove items when cache memory comes under pressure. This eviction is independent of the timeout.
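The two removal mechanisms can be sketched together in a few lines of Python. This is an illustration of the general technique, not the service's implementation: `ExpiringLruCache` is a hypothetical name, and `capacity` counts items as a simple stand-in for memory pressure.

```python
import time
from collections import OrderedDict


class ExpiringLruCache:
    """Toy cache with a per-item timeout plus least-recently-used eviction."""

    def __init__(self, capacity, default_ttl, clock=time.monotonic):
        self.capacity = capacity        # item count, standing in for memory size
        self.default_ttl = default_ttl  # default timeout in seconds
        self.clock = clock
        self._items = OrderedDict()     # key -> (value, expires_at), LRU first

    def put(self, key, value, ttl=None):
        # The default timeout may be overridden per item.
        ttl = self.default_ttl if ttl is None else ttl
        if key in self._items:
            del self._items[key]
        elif len(self._items) >= self.capacity:
            self._items.popitem(last=False)  # eviction: drop least recently used
        self._items[key] = (value, self.clock() + ttl)

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._items[key]             # expiration: timeout reached
            return None
        self._items.move_to_end(key)         # mark as most recently used
        return value
```

Note that an item can be evicted long before its timeout if the cache is full, so callers should always be prepared for a miss.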

What does it mean to me as a Developer?

One thing to note about the Caching service is that it’s an explicit cache that you write to and have full control over. It’s not a transparent cache layer on top of your database or storage. This has the benefit of providing full control over what data gets stored and managed in the cache, but also means you have to program against the cache as a separate data store using the cache APIs.

This pattern is typically referred to as cache-aside: you first check whether the data exists in the cache and, only when it’s not available there, you explicitly read it from the data tier and load it into the cache for later requests. So, as a developer, you need to learn the cache programming model, the APIs, and common tips and tricks to make your usage of the cache efficient.
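A minimal Python sketch of the cache-aside read path follows. The names `get_product` and `CountingDatabase` are hypothetical, a dict stands in for the cache, and the real cache client is a .NET API, so treat this as an illustration of the pattern only.

```python
class CountingDatabase:
    """Stand-in for the data tier that counts how often it is read."""

    def __init__(self, rows):
        self.rows = rows
        self.reads = 0

    def load(self, product_id):
        self.reads += 1
        return self.rows[product_id]


def get_product(product_id, cache, database):
    """Cache-aside read: try the cache, fall back to the data tier on a miss."""
    key = f"product:{product_id}"
    value = cache.get(key)
    if value is None:                      # cache miss
        value = database.load(product_id)  # explicit read from the data tier
        cache[key] = value                 # populate the cache for later requests
    return value


cache = {}
database = CountingDatabase({42: "kettle"})
first = get_product(42, cache, database)   # miss: goes to the database
second = get_product(42, cache, database)  # hit: served from the cache
```

The application code owns both steps, which is exactly what "explicit cache" means here: nothing reaches the cache unless you put it there.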

What does it mean to me as an Architect?

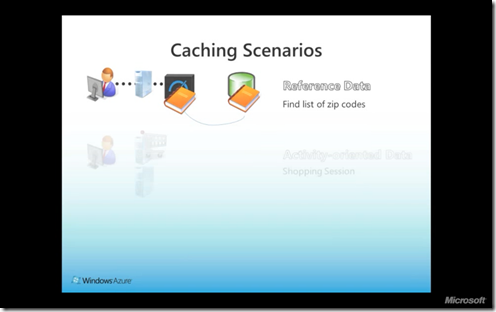

What data should you put in the cache? The answer varies significantly with the overall design of your application. When we talk about data for caching scenarios, we usually break it down by data type and access pattern:

- Reference Data (Shared Read Data): Reference data is a great candidate for keeping in the local cache or co-located with the client.

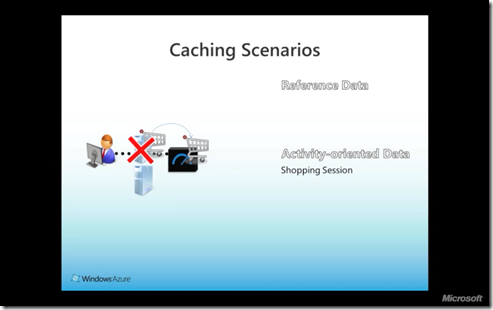

- Activity Data (Exclusive Write): Data relevant to the current session between the user and the application.

Take, for example, a shopping cart! During the buying session, the shopping cart is cached and updated with selected products. The shopping cart is visible and available only to the buying transaction. Upon checkout, as soon as the payment is applied, the shopping cart is retired from the cache to a data source application for additional processing.

Such a collection of data is best stored in the cache server, which gives access to all the distributed servers that may send updates to the shopping cart. If the cart were stored only in one server's local cache, it would be lost as soon as a request was routed to a different server.

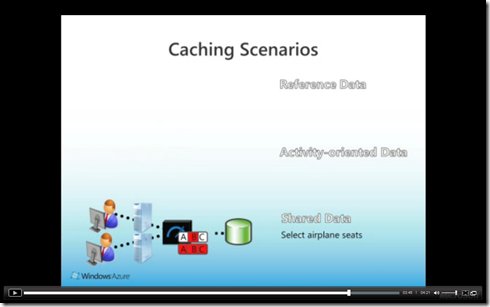

- Shared Data (Multiple Read and Write): There is also data that is shared, concurrently read and written, and accessed by lots of transactions. Such data is known as resource data.

Depending on the situation, caching shared data on a single computer can provide some performance improvements, but for large-scale scenarios such as online auctions, a single cache cannot provide the required scale or availability. For this purpose, some types of data can be partitioned and replicated in multiple caches across the distributed cache.

Be sure to spend enough time on capacity planning for your cache. The number of objects, the size of each object, the frequency of access of each object and the pattern for accessing these objects are all critical in determining not only how much cache you need for your application, but also which layers to optimize (local cache, network, cache tier, using regions and tags, and so on).

If you have a large number of small objects, and you don’t optimize for how frequently and how many objects you fetch, you can easily get your app to be network-bound.
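A back-of-the-envelope calculation helps here. The Python sketch below uses hypothetical helper names and made-up numbers (object counts, sizes, a 2 ms round trip, 10 MB/s of bandwidth, a 25% overhead ratio); none of these are measurements of the Caching service. It only shows how object count and per-request latency dominate when objects are small.

```python
import math


def estimate_cache_bytes(object_count, avg_object_bytes, overhead_ratio=0.0):
    """Rough cache size: payload plus an assumed per-object overhead ratio."""
    return math.ceil(object_count * avg_object_bytes * (1 + overhead_ratio))


def fetch_time_seconds(object_count, avg_object_bytes, round_trip_s,
                       bandwidth_bytes_per_s, objects_per_request=1):
    """Rough fetch time: one round trip per request plus raw transfer time."""
    round_trips = math.ceil(object_count / objects_per_request)
    transfer = object_count * avg_object_bytes / bandwidth_bytes_per_s
    return round_trips * round_trip_s + transfer


# Assumed workload: 1,000 objects of about 2 KB each, 25% overhead.
needed = estimate_cache_bytes(1000, 2048, overhead_ratio=0.25)

# Assumed network: 2 ms per round trip, 10 MB/s of bandwidth.
one_by_one = fetch_time_seconds(1000, 200, 0.002, 10_000_000)
grouped = fetch_time_seconds(1000, 200, 0.002, 10_000_000, objects_per_request=100)
```

Under these assumptions, fetching 1,000 tiny objects one at a time is dominated by round trips, while grouping them (for example, by caching related small objects together as one larger object) cuts the total time sharply for the same number of bytes.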

Also, Microsoft will soon release the pricing for the Caching service, so you need to ensure your usage of it is “optimized”. And when it comes to the cloud, “Optimized = Performance + Cost”!

Hope this helps you better understand this new term with respect to Azure.

Until Next Time

Cennest! “We can help you move to the cloud!”

References: